Supercharging Search and Retrieval for Unstructured Data

🌲 MCP-based APIs for your Generative AI roadmap, with best-in-class embeddings and reranking

Embeddings and Rerankers Drive RAG Retrieval and Response Quality

Unstructured data → Embedding model → Vector DB → Reranker → MCP Server → Your LLM App

Factual responses with lower costs
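The retrieval flow above (embed, store, search, rerank) can be sketched in a few lines of Python. This is a minimal, self-contained illustration under loud assumptions, not InfraHive's API: `embed` is a toy hashed bag-of-words stand-in for a real embedding model, `InMemoryVectorStore` is a hypothetical brute-force stand-in for a vector DB, and `rerank` is a toy term-overlap scorer standing in for a learned reranker. In a real deployment, a function like the hypothetical `retrieve` could be exposed by an MCP server as a tool for your LLM app.

```python
import math
import re
import zlib

DIM = 256  # toy embedding width (hypothetical); real models produce learned dense vectors


def tokenize(text: str) -> list[str]:
    """Lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text: str) -> list[float]:
    """Toy stand-in for an embedding model: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * DIM
    for tok in tokenize(text):
        vec[zlib.crc32(tok.encode()) % DIM] += 1.0  # deterministic hash bucket
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector DB: stores vectors, brute-force cosine search."""

    def __init__(self) -> None:
        self.docs: list[str] = []
        self.vecs: list[list[float]] = []

    def add(self, doc: str) -> None:
        self.docs.append(doc)
        self.vecs.append(embed(doc))

    def search(self, query: str, k: int) -> list[str]:
        q = embed(query)
        # Vectors are unit-length, so the dot product equals cosine similarity.
        scores = [sum(a * b for a, b in zip(q, v)) for v in self.vecs]
        ranked = sorted(range(len(self.docs)), key=lambda i: scores[i], reverse=True)
        return [self.docs[i] for i in ranked[:k]]


def rerank(query: str, candidates: list[str], top_n: int) -> list[str]:
    """Toy reranker: scores each (query, candidate) pair by query-term coverage.
    A production reranker would be a learned model, e.g. a cross-encoder."""
    q_terms = set(tokenize(query))

    def coverage(doc: str) -> float:
        return len(q_terms & set(tokenize(doc))) / max(len(q_terms), 1)

    return sorted(candidates, key=coverage, reverse=True)[:top_n]


def retrieve(store: InMemoryVectorStore, query: str, k: int = 3, top_n: int = 1) -> list[str]:
    """Stage 1: cheap, wide vector search. Stage 2: precise rerank of the k candidates."""
    return rerank(query, store.search(query, k), top_n)


store = InMemoryVectorStore()
for doc in (
    "Invoice 1042 from Acme totals 1200 USD and is due in 30 days.",
    "The master services agreement renews automatically each year.",
    "Purchase order 77 covers 40 laptops for the sales team.",
):
    store.add(doc)

print(retrieve(store, "When does the services agreement renew?"))
# ['The master services agreement renews automatically each year.']
```

The two-stage split is the design point: vector search is cheap and casts a wide net (`k` candidates), while the reranker looks at each query and candidate together and keeps only the best `top_n` for the LLM's context, which is what keeps responses factual and prompts short.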


InfraHive AI

Large language models thrive when powered by clean, curated data. But most real-world data is hard to find, hard to work with, and hard to clean. We make it easy.

[Illustration: "Approval required" budget approval request for Quote #1905 ($250,182.00), sent to Abhinav]

ACCOUNTS PAYABLE

Structured data for your AI Agents to automate AP

Seamlessly integrate with CRMs and business platforms like Salesforce, HubSpot, or Airtable, with data ready for AI-driven automation.

Automate 2-way and 3-way matching against Purchase Requests using structured data, streamlining AP workflows.

Plug structured data directly into LLMs to optimize your supply chain and process orders 50% faster.
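For readers new to the terms: 2-way matching typically checks an invoice against the purchasing document it bills for, and 3-way matching additionally checks it against what was actually received. The Python sketch below shows that logic once the documents are already structured. The schema (`Line`, per-SKU dictionaries), the `match` function, and the 2% price tolerance are all hypothetical choices for illustration, not InfraHive's implementation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Line:
    """One invoice or order line (hypothetical schema)."""
    quantity: int
    unit_price: float


def match(
    invoice: dict[str, Line],
    order: dict[str, Line],
    received: Optional[dict[str, int]] = None,
    price_tolerance: float = 0.02,  # hypothetical: allow 2% unit-price variance
) -> list[str]:
    """Return discrepancies per SKU; an empty list means the invoice can proceed to approval.
    2-way match when `received` is None, 3-way match when goods-receipt quantities are given."""
    issues: list[str] = []
    for sku, inv in invoice.items():
        po = order.get(sku)
        if po is None:
            issues.append(f"{sku}: not on order")
            continue
        if inv.quantity > po.quantity:
            issues.append(f"{sku}: invoiced {inv.quantity} > ordered {po.quantity}")
        if abs(inv.unit_price - po.unit_price) > price_tolerance * po.unit_price:
            issues.append(f"{sku}: price {inv.unit_price} vs ordered {po.unit_price}")
        if received is not None:
            got = received.get(sku, 0)
            if inv.quantity > got:
                issues.append(f"{sku}: invoiced {inv.quantity} > received {got}")
    return issues


order = {"LAPTOP-14": Line(quantity=40, unit_price=950.0)}
invoice = {"LAPTOP-14": Line(quantity=40, unit_price=960.0)}
received = {"LAPTOP-14": 38}

print(match(invoice, order))            # 2-way: []
print(match(invoice, order, received))  # 3-way: ['LAPTOP-14: invoiced 40 > received 38']
```

The same invoice passes the 2-way check but fails the 3-way check, because two laptops were billed but never received; that is exactly the kind of discrepancy an AP agent would route for human approval.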

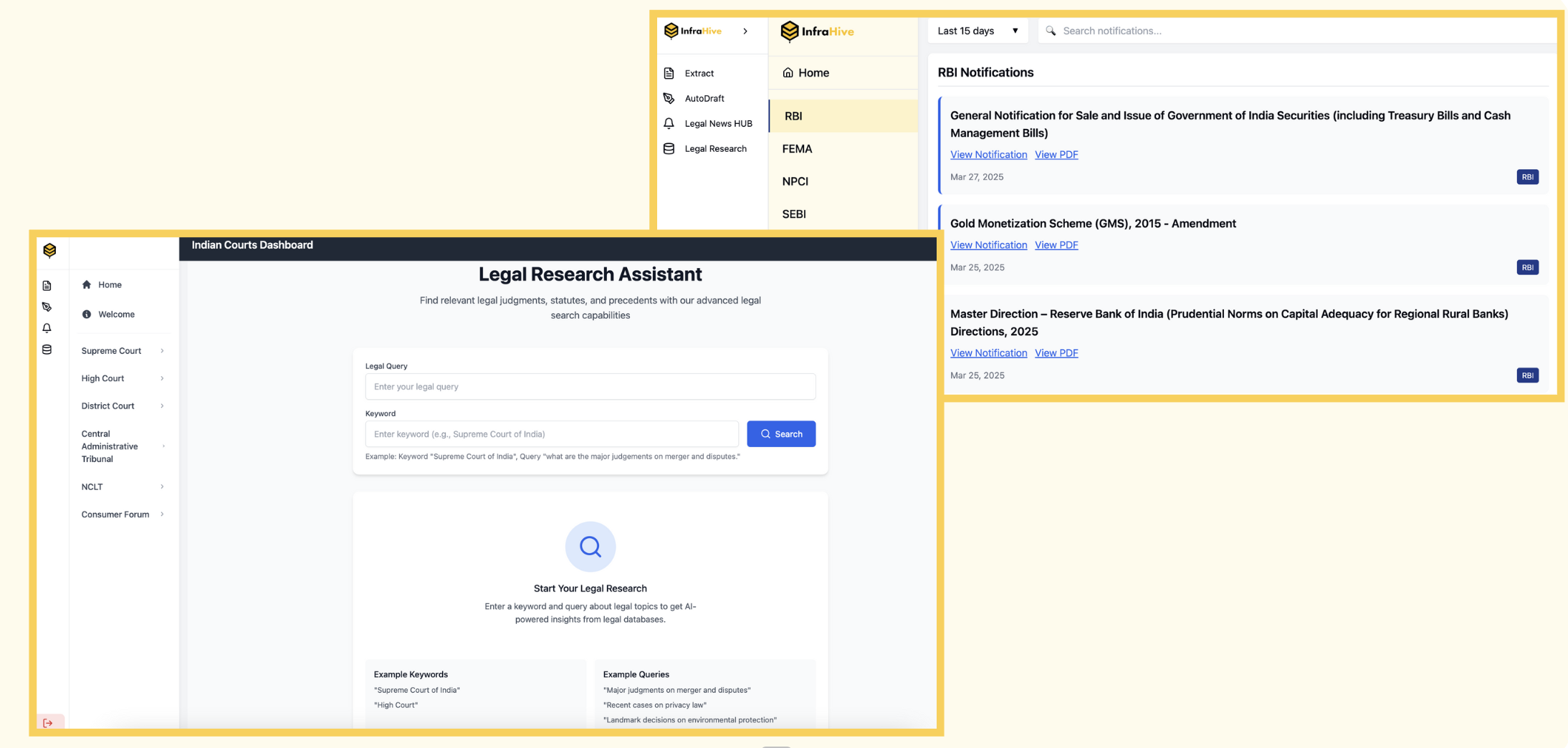

LEGAL

Build AI Agents for Legal Teams

Automate drafting and vetting of legal documents.

Compare and analyze multiple agreements.

Combine internet and internal data for automated legal research.

Transform unstructured data into actionable legal insights.

Powered by the Best AI Embedding Models and Engineering

High accuracy

Retrieves the most relevant contextual information

Low dimensionality

3x-8x shorter vectors ⇒ cheaper vector search and storage

Low latency

4x smaller model and faster inference with superior accuracy

Cost-efficient

2x cheaper inference with superior accuracy

Long-context

Longest commercial context length available (32K tokens)

Modularity

Plug-and-play with any vector DB and LLM
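To make the low-dimensionality claim concrete: raw vector storage grows linearly with the number of dimensions, so 3x-8x shorter vectors need 3x-8x less space, and brute-force similarity search does proportionally fewer multiply-adds per comparison. The Python sketch below assumes a hypothetical 10-million-vector corpus, float32 storage (4 bytes per dimension), and a hypothetical 3072-dimension baseline; it ignores index overhead and compression.

```python
BYTES_PER_FLOAT32 = 4
NUM_VECTORS = 10_000_000  # hypothetical corpus size
BASELINE_DIMS = 3072      # hypothetical baseline embedding width


def raw_index_gb(num_vectors: int, dims: int, bytes_per_dim: int = BYTES_PER_FLOAT32) -> float:
    """Raw vector storage in GB (10**9 bytes); excludes index overhead and compression."""
    return num_vectors * dims * bytes_per_dim / 1e9


for shrink in (1, 3, 8):
    dims = BASELINE_DIMS // shrink
    print(f"{dims} dims: {raw_index_gb(NUM_VECTORS, dims):.1f} GB")
# 3072 dims: 122.9 GB
# 1024 dims: 41.0 GB
# 384 dims: 15.4 GB
```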